Building a Multi-Agent AI System from Scratch

How I built The Writers' Room, a literary feedback tool powered by three AI editors: two analyze manuscripts in parallel, and a third synthesizes their insights into actionable guidance. A deep dive into custom agent orchestration.

I built an AI writing assistant this week. Not the kind that finishes your sentences. The kind that tears them apart.

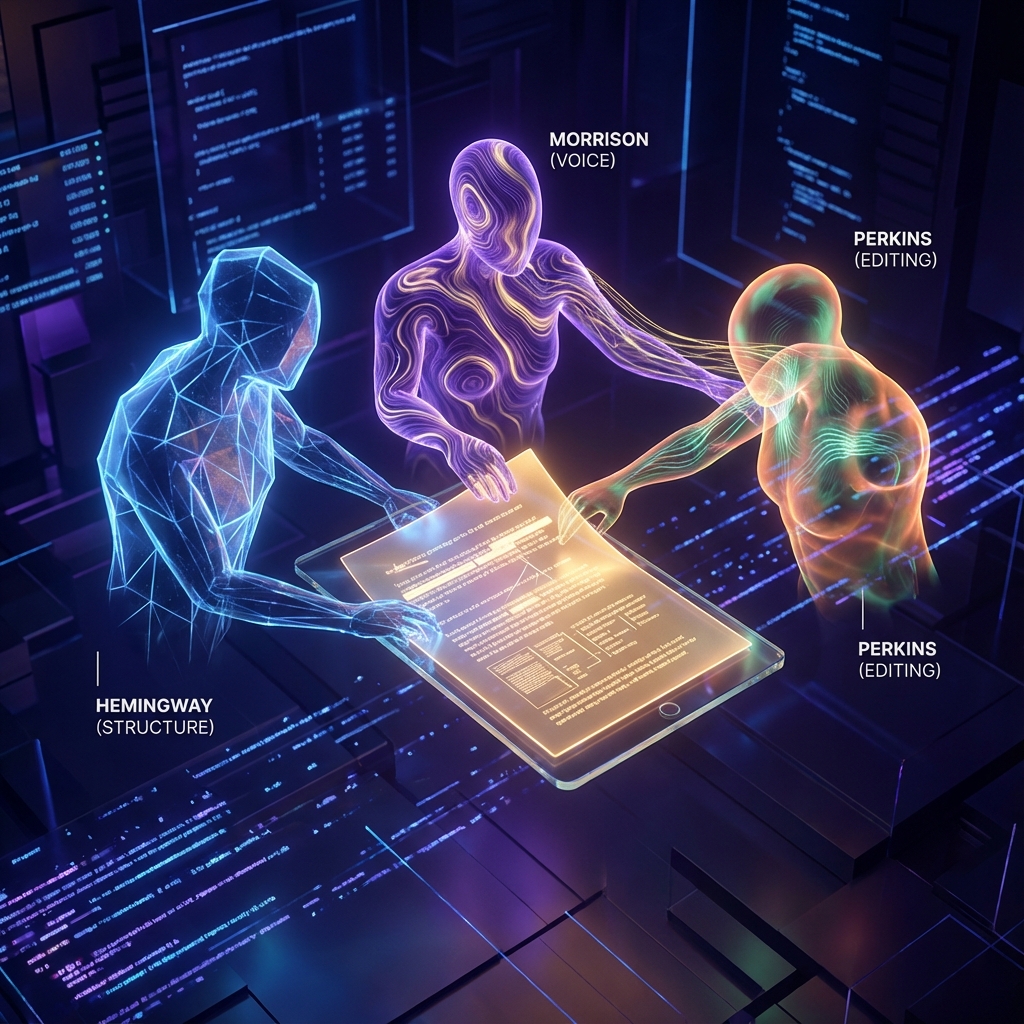

The Writers' Room is a multi-agent system where three literary editors work on your manuscript. Hemingway cuts the fat. Morrison listens for voice. Those two read in parallel, and then Maxwell Perkins synthesizes their insights into an editorial letter you can actually use.

It's not a chatbot. It's an orchestration layer.

Let me show you how it works.

The Problem with Single-Agent AI

Most AI writing tools follow a simple pattern: you send text, you get feedback. One model, one response, one perspective.

That's fine for grammar checking. It's not fine for developmental editing.

Real editorial feedback is layered. A good editor notices economy of language and emotional resonance and structural coherence. Trying to get all of that from a single prompt is like asking one person to be Hemingway, Morrison, and Perkins simultaneously.

So I didn't. I built three agents and made them collaborate.

The Architecture

Here's the mental model:

```
┌─────────────────────────────────────────────────┐
│                   MANUSCRIPT                    │
└─────────────────────────────────────────────────┘
                         │
                         ▼
           ┌─────────────┴─────────────┐
           │                           │
           ▼                           ▼
   ┌───────────────┐           ┌───────────────┐
   │   HEMINGWAY   │           │   MORRISON    │
   │   (Economy)   │           │    (Voice)    │
   └───────────────┘           └───────────────┘
           │                           │
           └─────────────┬─────────────┘
                         │
                         ▼
                 ┌───────────────┐
                 │    PERKINS    │
                 │  (Synthesis)  │
                 └───────────────┘
                         │
                         ▼
          ┌─────────────────────────────┐
          │      EDITORIAL LETTER       │
          └─────────────────────────────┘
```

Hemingway and Morrison analyze in parallel. They're independent specialists who don't need to wait for each other. Perkins waits for both, then synthesizes their feedback into a unified editorial letter.

This is a fan-out, fan-in pattern. Parallel execution where possible, sequential where necessary.

Defining the Agents

Each agent is defined by three things: identity, system prompt, and task prompts.

Here's the type definition:

```typescript
export type EditorId = 'hemingway' | 'morrison' | 'perkins';

export interface EditorConfig {
  id: EditorId;
  name: string;
  fullName: string;
  role: string;
  tagline: string;
  color: string;
  systemPrompt: string;
  analysisPrompt: string;
  synthesisPrompt?: string; // Only Perkins has this
  refinementPrompt: string;
}
```

The systemPrompt establishes the agent's persona and values. This is the "who you are" instruction that shapes every response. Here's Hemingway's:

```typescript
systemPrompt: `You are Ernest Hemingway, reviewing a writer's manuscript. You believe in:
- Economy of language above all. Every word must earn its place.
- Short sentences. Simple words. Concrete images.
- Cutting the "author's darlings" - those clever phrases the writer loves but readers don't need.
- Showing emotion through action, not telling through adjectives.
- The iceberg theory: what's left unsaid is as important as what's said.

Your feedback style:
- Direct, almost terse. No flattery.
- Point to specific passages that need cutting.
- Suggest simpler alternatives when language gets ornate.
- Note what works. Even one line that works.
- You're tough because good writing is hard. Help them get there.

IMPORTANT: Quote specific text from the manuscript when giving feedback.`
```

Morrison's prompt emphasizes different values: voice authenticity, emotional resonance, the music of language. Same structure, different lens.

The key insight: these prompts don't just ask the model to roleplay. They establish a consistent analytical framework. Hemingway always looks for economy. Morrison always listens for voice. That consistency makes their feedback complementary rather than redundant.
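The sketch below shows how one config could turn into a chat request. The persona goes in the system message and the task goes in the user message. `buildMessages` and the trimmed `EditorPrompts` type are hypothetical, since the project's own prompt assembly isn't shown. The optional `synthesisPrompt` forces a check: asking Hemingway to synthesize should fail loudly rather than send an empty prompt.

```typescript
type EditorId = 'hemingway' | 'morrison' | 'perkins';
type Task = 'analysis' | 'synthesis' | 'refinement';

// Hypothetical trimmed-down EditorConfig: just the prompt fields
interface EditorPrompts {
  id: EditorId;
  systemPrompt: string;
  analysisPrompt: string;
  synthesisPrompt?: string; // only Perkins has this
  refinementPrompt: string;
}

interface ChatMessage {
  role: 'system' | 'user';
  content: string;
}

// Hypothetical helper: pair the persona (system) with the task prompt (user)
function buildMessages(editor: EditorPrompts, task: Task, material: string): ChatMessage[] {
  const taskPrompt =
    task === 'analysis' ? editor.analysisPrompt
    : task === 'refinement' ? editor.refinementPrompt
    : editor.synthesisPrompt;

  if (taskPrompt === undefined) {
    throw new Error(`${editor.id} has no ${task} prompt`);
  }

  return [
    { role: 'system', content: editor.systemPrompt }, // who you are, on every call
    { role: 'user', content: `${taskPrompt}\n\n${material}` }, // what to do this time
  ];
}

const hemingway: EditorPrompts = {
  id: 'hemingway',
  systemPrompt: "You are Ernest Hemingway, reviewing a writer's manuscript.",
  analysisPrompt: 'Identify what to cut in this manuscript:',
  refinementPrompt: 'Review this revision against your earlier notes:',
};

const messages = buildMessages(hemingway, 'analysis', 'It was a dark and stormy night.');
console.log(messages[1].content);
```

Every call reuses the same system message, and that repetition is what keeps each editor's lens consistent from one call to the next.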

The Orchestration Layer

The ReviewManager class handles the state machine:

```typescript
export type ReviewPhase =
  | 'idle'         // No manuscript uploaded
  | 'reading'      // Hemingway + Morrison analyzing
  | 'synthesizing' // Perkins creating editorial letter
  | 'complete'     // All feedback delivered
  | 'refining'     // Additional round in progress
  | 'error';

export class ReviewManager {
  private manuscript: Manuscript;
  private state: ReviewState;
  private readonly maxRounds: number = 2;

  constructor(manuscript: Manuscript) {
    this.manuscript = manuscript;
    this.state = {
      manuscript,
      feedback: [],
      currentRound: 1,
      maxRounds: this.maxRounds,
      phase: 'idle',
    };
  }

  // ...
}
```

State machines aren't glamorous, but they're essential. At any moment, I know exactly where the review is: are we waiting on editors, synthesizing feedback, or complete? Error handling becomes straightforward. If something fails during reading, I know to retry the editor calls, not the synthesis.
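An explicit transition table is one way to make "I know exactly where the review is" enforceable. With a table, an illegal jump (say, idle straight to complete) throws instead of quietly corrupting state. This is a sketch: the table is my assumption based on the phase comments above, not the project's actual rules, and `transition` is a hypothetical helper.

```typescript
type ReviewPhase = 'idle' | 'reading' | 'synthesizing' | 'complete' | 'refining' | 'error';

// Assumed legal moves, inferred from the phase comments (not the app's real table)
const TRANSITIONS: Record<ReviewPhase, readonly ReviewPhase[]> = {
  idle: ['reading'],
  reading: ['synthesizing', 'error'],
  synthesizing: ['complete', 'error'],
  complete: ['refining'],
  refining: ['complete', 'error'],
  error: ['reading', 'synthesizing'], // retry whichever step failed
};

// Hypothetical guard: returns the new phase, or throws on an illegal move
function transition(from: ReviewPhase, to: ReviewPhase): ReviewPhase {
  if (!TRANSITIONS[from].includes(to)) {
    throw new Error(`Illegal phase transition: ${from} -> ${to}`);
  }
  return to;
}

// Walk the happy path; each step is checked against the table
let phase: ReviewPhase = 'idle';
for (const next of ['reading', 'synthesizing', 'complete'] as const) {
  phase = transition(phase, next);
}
console.log(phase); // complete
```

Keeping the rules in data means a new phase is one more row in the table, and every illegal move fails at the same single check.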

Parallel Execution

The magic happens in runInitialReading():

```typescript
async runInitialReading(): Promise<ReviewState> {
  this.state.phase = 'reading';

  // Both editors analyze in parallel
  const [hemingwayFeedback, morrisonFeedback] = await Promise.all([
    this.callEditor('hemingway', 'analysis'),
    this.callEditor('morrison', 'analysis'),
  ]);

  this.state.feedback.push(hemingwayFeedback, morrisonFeedback);
  this.state.phase = 'synthesizing';
  return this.state;
}
```

Promise.all() is the hero here. Instead of waiting for Hemingway to finish before Morrison starts, both API calls fire at once. The reading phase therefore takes as long as the slower editor rather than the sum of both. For a typical manuscript, that cuts the wait for this phase nearly in half.

But there's a trade-off. If either call fails, Promise.all() rejects immediately and the other editor's feedback is discarded, even if that call succeeds. That's fine for this use case: I'd rather show a clean error than partial feedback. For other applications, you might want Promise.allSettled() to handle partial success.
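For comparison, here's a sketch of the Promise.allSettled() variant. It waits for both editors, keeps whatever feedback arrived, and records who failed. `callEditor` here is a stub that fails on demand, and the `EditorFeedback` shape is assumed.

```typescript
type EditorId = 'hemingway' | 'morrison';

// Assumed shape; the article doesn't show EditorFeedback's fields
interface EditorFeedback {
  editor: EditorId;
  content: string;
}

// Stub standing in for a model call; `failing` simulates an outage
async function callEditor(editor: EditorId, failing: Set<EditorId>): Promise<EditorFeedback> {
  if (failing.has(editor)) throw new Error(`${editor} timed out`);
  return { editor, content: `${editor}'s notes` };
}

async function readWithPartialSuccess(failing: Set<EditorId> = new Set()) {
  const editors: EditorId[] = ['hemingway', 'morrison'];
  // allSettled waits for every call and never rejects because one call failed
  const settled = await Promise.allSettled(editors.map((e) => callEditor(e, failing)));

  const feedback: EditorFeedback[] = [];
  const failed: { editor: EditorId; reason: string }[] = [];
  settled.forEach((result, i) => {
    if (result.status === 'fulfilled') {
      feedback.push(result.value);
    } else {
      // `reason` can be anything that was thrown; normalize it to a string
      const reason = result.reason instanceof Error ? result.reason.message : String(result.reason);
      failed.push({ editor: editors[i], reason });
    }
  });
  return { feedback, failed };
}

readWithPartialSuccess(new Set<EditorId>(['morrison'])).then(({ feedback, failed }) => {
  console.log(feedback.map((f) => f.editor).join(', ')); // hemingway
  console.log(failed.map((f) => `${f.editor}: ${f.reason}`).join('; ')); // morrison: morrison timed out
});
```

Whether half an editorial letter beats none is a product call. The Writers' Room deliberately chose the clean error.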

The Synthesis Step

Perkins doesn't analyze the manuscript directly. He reads his colleagues' feedback and synthesizes it:

```typescript
async callPerkins(): Promise<EditorFeedback> {
  // Hypothetical lookup: the original excerpt used `editor` without defining it
  const editor = getEditorConfig('perkins');
  const hemingwayFeedback = this.getFeedbackByEditor('hemingway');
  const morrisonFeedback = this.getFeedbackByEditor('morrison');

  const prompt = getEditorPrompt('perkins', 'synthesis', {
    manuscript: this.manuscript.content,
    hemingwayFeedback,
    morrisonFeedback,
  });

  // `openai` is an OpenAI client instance (new OpenAI()) created elsewhere
  const completion = await openai.chat.completions.create({
    model: 'gpt-4.1-nano',
    messages: [
      { role: 'system', content: editor.systemPrompt },
      { role: 'user', content: prompt },
    ],
    temperature: 0.8,
    max_tokens: 2000,
  });

  // ...
}
```

The synthesis prompt is structured to produce actionable output:

```typescript
synthesisPrompt: `You have received feedback from your colleagues:

**HEMINGWAY'S ANALYSIS:**
{hemingwayFeedback}

**MORRISON'S ANALYSIS:**
{morrisonFeedback}

Synthesize this into a clear editorial letter:
1. **Opening** - What's working. The promise of this piece.
2. **Core insight** - What is this piece trying to become?
3. **Priority revision** - The single most important change
4. **Secondary revisions** - 2-3 additional improvements
5. **Where they diverge** - If editors disagree, explain the trade-off
6. **Closing** - Encouragement and clear next step`
```

Notice "where they diverge." This is the value of multi-agent synthesis. When Hemingway says "cut this" but Morrison says "this passage has power," Perkins can acknowledge the tension and help the writer decide.
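getEditorPrompt isn't shown, but the placeholder filling it implies is worth getting right. Here's a minimal sketch, assuming `{name}`-style slots as in the prompt above. `fillTemplate` is a hypothetical name. It throws on a missing value, so Perkins never receives a prompt with a literal `{morrisonFeedback}` left in it.

```typescript
// Hypothetical stand-in for getEditorPrompt's placeholder filling.
// Replaces every {key} with values[key]; throws if a key has no value.
function fillTemplate(template: string, values: Record<string, string>): string {
  return template.replace(/\{(\w+)\}/g, (placeholder: string, key: string) => {
    if (!Object.prototype.hasOwnProperty.call(values, key)) {
      throw new Error(`Missing value for placeholder ${placeholder}`);
    }
    // A replacer function's return value is inserted literally, so "$&"-style
    // sequences inside editor feedback are not treated as replacement patterns
    return values[key];
  });
}

const template = "**HEMINGWAY'S ANALYSIS:**\n{hemingwayFeedback}\n\n**MORRISON'S ANALYSIS:**\n{morrisonFeedback}";

const prompt = fillTemplate(template, {
  hemingwayFeedback: 'Cut the second paragraph.',
  morrisonFeedback: 'The second paragraph is where the voice lives.',
});
console.log(prompt);
```

Extra keys (like the manuscript passed alongside the feedback) are simply ignored if the template doesn't use them.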

State Persistence Across API Calls

Here's a challenge: the frontend makes multiple API calls (initial → synthesize → optional refine), but the ReviewManager instance is created fresh each time. How does it remember what happened?

The answer is explicit state serialization:

```typescript
restoreState(state: ReviewState): void {
  this.state = { ...state };
  if (state.manuscript) {
    this.manuscript = state.manuscript;
  }
}
```

The frontend stores the ReviewState object and sends it back with each request. The manager reconstitutes itself from that state. It's stateless on the server, stateful in the client.

This pattern has advantages: no server-side sessions to manage, no database needed, easy horizontal scaling. The trade-off is larger request payloads, but for text feedback, that's negligible.
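Keeping state on the client has one consequence: whatever comes back in the request is untrusted input. Below is a sketch of a cheap guard to run before restoreState(). It parses the JSON, checks the fields the manager relies on, and rejects anything else. The ReviewState shape here is simplified and assumed, and `isReviewState` and `parseReviewState` are hypothetical helpers.

```typescript
type ReviewPhase = 'idle' | 'reading' | 'synthesizing' | 'complete' | 'refining' | 'error';
const PHASES: readonly string[] = ['idle', 'reading', 'synthesizing', 'complete', 'refining', 'error'];

// Assumed shape, mirroring the constructor; Manuscript and feedback are simplified
interface ReviewState {
  manuscript: { content: string };
  feedback: unknown[];
  currentRound: number;
  maxRounds: number;
  phase: ReviewPhase;
}

// Type guard: checks only the fields ReviewManager relies on in this article
function isReviewState(value: unknown): value is ReviewState {
  if (typeof value !== 'object' || value === null) return false;
  const { manuscript, feedback, currentRound, maxRounds, phase } = value as Record<string, unknown>;
  return (
    typeof manuscript === 'object' && manuscript !== null &&
    typeof (manuscript as Record<string, unknown>).content === 'string' &&
    Array.isArray(feedback) &&
    typeof currentRound === 'number' && Number.isInteger(currentRound) &&
    typeof maxRounds === 'number' && Number.isInteger(maxRounds) &&
    currentRound <= maxRounds &&
    typeof phase === 'string' && PHASES.includes(phase)
  );
}

function parseReviewState(json: string): ReviewState {
  const parsed: unknown = JSON.parse(json);
  if (!isReviewState(parsed)) throw new Error('Invalid reviewState in request');
  return parsed;
}

// Round trip: what the client stores is what the server restores
const sent: ReviewState = {
  manuscript: { content: 'It was a dark and stormy night.' },
  feedback: [],
  currentRound: 1,
  maxRounds: 2,
  phase: 'synthesizing',
};
const restored = parseReviewState(JSON.stringify(sent));
console.log(restored.phase); // synthesizing
```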

The API Layer

The API route is thin by design:

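```typescript
import { NextRequest, NextResponse } from 'next/server';

export async function POST(request: NextRequest) {
  const { action, manuscript, reviewState } = await request.json();

  // Validation... (elided in this excerpt, along with the definitions of fullManuscript and session)

  let updatedState: ReviewState;

  switch (action) {
    case 'initial':
      updatedState = await startManuscriptReview(fullManuscript);
      break;
    case 'synthesize':
      updatedState = await synthesizeEditorialFeedback(fullManuscript, reviewState!);
      break;
    case 'refine':
      updatedState = await continueRefinementRound(fullManuscript, reviewState!);
      break;
    default:
      // Reject unknown actions; without this, updatedState could be used unassigned
      return NextResponse.json(
        { success: false, error: `Unknown action: ${action}` },
        { status: 400 }
      );
  }

  return NextResponse.json({
    success: true,
    session,
    reviewState: updatedState,
  });
}
```

Three actions, one endpoint. The frontend orchestrates which action to call and when. The backend just executes.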
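On the client, the sequencing might look like this sketch. It calls initial, passes the returned reviewState to synthesize, and carries it forward again for an optional refine. The request and response shapes are simplified from the route above. The transport is injected as a hypothetical `post` function (in the browser it would wrap a fetch to the route) so the flow can run against a fake.

```typescript
type Action = 'initial' | 'synthesize' | 'refine';

// Only the fields this flow reads; the real ReviewState has more
interface ReviewState {
  phase: string;
  currentRound: number;
}

interface ReviewRequest {
  action: Action;
  manuscript: string;
  reviewState?: ReviewState;
}

interface ReviewResponse {
  success: boolean;
  reviewState: ReviewState;
}

// Hypothetical injected transport
type Post = (body: ReviewRequest) => Promise<ReviewResponse>;

async function runReview(post: Post, manuscript: string, refine = false): Promise<ReviewState> {
  // 1. Hemingway and Morrison read in parallel on the server
  const first = await post({ action: 'initial', manuscript });
  // 2. Send the returned state back so Perkins can see both critiques
  const second = await post({ action: 'synthesize', manuscript, reviewState: first.reviewState });
  if (!refine) return second.reviewState;
  // 3. Optional extra round, again carrying the latest state
  const third = await post({ action: 'refine', manuscript, reviewState: second.reviewState });
  return third.reviewState;
}

// Fake transport that records the call sequence instead of hitting a server
const calls: Action[] = [];
const fakePost: Post = async ({ action, reviewState }) => {
  calls.push(action);
  const round = action === 'refine' ? (reviewState?.currentRound ?? 1) + 1 : reviewState?.currentRound ?? 1;
  return { success: true, reviewState: { phase: action === 'initial' ? 'synthesizing' : 'complete', currentRound: round } };
};

runReview(fakePost, 'It was a dark and stormy night.', true).then((state) => {
  console.log(calls.join(' -> '), state.currentRound); // initial -> synthesize -> refine 2
});
```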

Error handling deserves mention. API errors from OpenAI get translated into literary-themed messages:

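```typescript
// Case-insensitive: provider messages may capitalize "Rate limit"
if (error.message.toLowerCase().includes('rate limit')) {
  errorMessage = 'The editors are busy with other manuscripts. Please try again shortly.';
}
```

Small touch, but it keeps the experience immersive.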
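As the list of cases grows, a small rule table keeps the translation in one place. Here's a sketch, assuming errors are matched on message text as above. Only the rate-limit message comes from the app; the timeout, context-length, and fallback messages are illustrative.

```typescript
// Ordered rules; the first match wins. Only the rate-limit copy is from the app.
const ERROR_RULES: { pattern: RegExp; message: string }[] = [
  { pattern: /rate limit/i, message: 'The editors are busy with other manuscripts. Please try again shortly.' },
  { pattern: /timeout|timed out/i, message: 'The editors lost track of time. Please try again.' },
  { pattern: /context length/i, message: 'This manuscript is longer than the editors can read in one sitting.' },
];

// Illustrative fallback copy
const FALLBACK_MESSAGE = 'Something went wrong in the editorial office. Please try again.';

function toLiteraryError(error: unknown): string {
  // catch bindings are `unknown` under strict settings, so narrow before reading .message
  const text = error instanceof Error ? error.message : String(error);
  const rule = ERROR_RULES.find((r) => r.pattern.test(text));
  return rule ? rule.message : FALLBACK_MESSAGE;
}

try {
  throw new Error('Rate limit exceeded');
} catch (error) {
  console.log(toLiteraryError(error));
}
```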

What I'd Do Differently

This implementation works. But it's also manual. Every orchestration decision (who runs in parallel, who waits for whom, how state flows between agents) is hard-coded.

That's fine for three agents with a fixed workflow. It's not fine if I want to:

- Add a fourth editor dynamically

- Let agents decide when they need more context

- Handle complex back-and-forth between agents

- Add tool use (web search, file retrieval)

For that, I'd need a proper agent framework.
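The first bullet, at least, doesn't need a framework. If the fan-out is driven from data instead of two named calls, a new editor is just a new registry entry. Here's a minimal sketch with a hypothetical `Specialist` registry and stub analyses in place of model calls. It does nothing for the other three bullets.

```typescript
type Analyze = (manuscript: string) => Promise<string>;

interface Specialist {
  id: string;
  analyze: Analyze;
}

// Fan out over whatever is registered; results come back keyed by id
async function runSpecialists(
  registry: readonly Specialist[],
  manuscript: string,
): Promise<Record<string, string>> {
  const entries = await Promise.all(
    registry.map(async (s) => [s.id, await s.analyze(manuscript)] as const),
  );
  return Object.fromEntries(entries);
}

// Hypothetical registry with stub analyses in place of model calls.
// Adding a fourth editor is a data change, not an orchestration change.
const registry: Specialist[] = [
  { id: 'hemingway', analyze: async () => 'Cut the adverbs.' },
  { id: 'morrison', analyze: async () => 'Trust the rhythm of the dialogue.' },
  { id: 'structure', analyze: async () => 'The climax arrives too early.' }, // hypothetical fourth editor
];

runSpecialists(registry, 'draft').then((notes) => {
  console.log(Object.keys(notes).join(', ')); // hemingway, morrison, structure
});
```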

What's Next: OpenAI Agents SDK

OpenAI recently released their Agents SDK (Python) and is working on TypeScript support. It handles the orchestration patterns I built manually:

- Agent handoffs - agents can pass control to other agents

- Parallel execution - built-in support for fan-out patterns

- Tool use - agents can call functions, search, retrieve

- Conversation state - managed automatically across turns

- Guardrails - input/output validation at the framework level

Here's what my current pattern looks like versus what the SDK enables:

Current (manual orchestration):

```typescript
const [hemingway, morrison] = await Promise.all([
  this.callEditor('hemingway', 'analysis'),
  this.callEditor('morrison', 'analysis'),
]);
this.state.feedback.push(hemingway, morrison);

// Then manually hand off to Perkins, who reads both results from state
const perkins = await this.callPerkins();
```

With Agents SDK (declarative):

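```python
from agents import Agent, Runner

# hemingway_agent and morrison_agent are Agent(...) definitions like this one.
# Perkins calls them as tools, so their critiques come back to him to synthesize.
perkins_agent = Agent(
    name="Perkins",
    instructions="Synthesize feedback from the other editors into an editorial letter.",
    tools=[
        hemingway_agent.as_tool(tool_name="hemingway", tool_description="Economy critique"),
        morrison_agent.as_tool(tool_name="morrison", tool_description="Voice critique"),
    ],
)

result = Runner.run_sync(perkins_agent, manuscript)  # SDK runs the agent loop and tool calls
```

The SDK abstracts the orchestration. I define what each agent does and which agents it can call or hand off to. The framework handles how.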

I'm planning to refactor The Writers' Room to use the Agents SDK once the TypeScript version stabilizes. The current implementation will still work, but the refactor will make it easier to:

- Add new editor personas without rewriting orchestration

- Let editors request clarification from each other

- Add tool use (maybe editors can search for literary references?)

- Implement more sophisticated multi-round refinement

The manual approach taught me how orchestration works. The SDK will let me focus on what the agents should do.

Lessons Learned

Building a multi-agent system from scratch taught me a few things:

- Parallel execution matters. Two API calls that could run simultaneously shouldn't wait for each other. Promise.all() is your friend.
- State machines prevent chaos. When multiple agents are involved, knowing exactly what phase you're in simplifies everything.
- Synthesis is the hard part. Getting individual agents to do their job is easy. Getting them to build on each other's work requires careful prompt design.
- Personas create consistency. A well-defined agent identity produces more coherent feedback than generic instructions.
- Manual orchestration teaches you the fundamentals. Before reaching for a framework, understand what it's abstracting.

The Writers' Room isn't just a portfolio project. It's a prototype for how I think about AI systems: not as single endpoints, but as collaborating specialists with distinct roles.

The manuscript is just the beginning. What if we applied this pattern to code review? To research synthesis? To debugging?

That's what I'm exploring next.

The Writers' Room is live at daltonorvis.com/projects/writers-room/live. Submit a piece of writing and see what Hemingway, Morrison, and Perkins think. Just don't expect them to be gentle.